UsyBus

A Communication Framework among Reusable Agents integrating Eye-Tracking in Interactive Applications

What is UsyBus?

UsyBus is a communication framework for autonomous, tight coupling among reusable agents. Agents may be responsible for collecting data from eye-trackers, analyzing eye movements, and managing communication with other modules of an interactive application.

The UsyBus model is a multi-agent software architecture where agents interact with each other in an autonomous way. UsyBus adopts a multiple-input multiple-output (MIMO) paradigm: any UsyBus agent can send data to the data exchange bus via one or many channels, and receive data in the same way. Communication channels are defined by UsyBus data types.

Agents connected to UsyBus can emit and retrieve data on the data exchange bus without regard to other agents, thus remaining autonomous. In other words, sending agents do not need to know to which agent data is sent, and receiving agents do not need to know which agent the data comes from.

For example, one sending agent retrieves raw oculometric data from an eye-tracker and sends formatted gaze point data; a second agent, both receiving and sending, receives these points, calculates the fixations, and sends them; finally, a third, receiving agent retrieves all these data and displays them.
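The three-agent example above can be sketched with a toy in-memory bus. This is only an illustration of the decoupling idea, not the UsyBus API: `ToyBus`, `run_pipeline`, and the "average every three points" rule are hypothetical names and simplifications introduced here.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class ToyBus:
    """In-memory stand-in for the data exchange bus (hypothetical, not UsyBus itself)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, data_type: str, handler: Handler) -> None:
        # A receiver only names the data type; it never names a sender.
        self._subscribers[data_type].append(handler)

    def publish(self, data_type: str, data: dict) -> None:
        # A sender only names the data type; it never names a receiver.
        for handler in list(self._subscribers[data_type]):
            handler(data)


def run_pipeline(samples):
    """Wire a sender, a sender/receiver, and a receiver; return what was displayed."""
    bus = ToyBus()
    displayed = []
    window = []

    def fixation_agent(point: dict) -> None:
        # Toy rule (NOT a real fixation algorithm): average every 3 points.
        window.append(point)
        if len(window) == 3:
            bus.publish("eyetracking:fixation", {
                "tc": window[0]["tc"],
                "x": sum(p["x"] for p in window) // 3,
                "y": sum(p["y"] for p in window) // 3,
            })
            window.clear()

    def display_agent(kind: str) -> Handler:
        return lambda data: displayed.append((kind, data))

    bus.subscribe("eyetracking:point", fixation_agent)
    bus.subscribe("eyetracking:point", display_agent("point"))
    bus.subscribe("eyetracking:fixation", display_agent("fixation"))

    # Sending agent: publishes formatted gaze points.
    for tc, x, y in samples:
        bus.publish("eyetracking:point", {"tc": tc, "x": x, "y": y})
    return displayed
```

Note that adding or removing the display agent requires no change to the two other agents, which is the property the text calls autonomy.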

Framework

Datagram

Data transmitted in messages that are exchanged between UsyBus agents must respect the UsyBus datagram format. The datagram is structured into two parts: the header, which contains metadata such as the version of the bus, the type of data, and the origin of the data, and the payload, which contains the data to be processed by the receiving agent(s).

The datagram structure uses a JSON-inspired format:

UB2;type=data-type(:data-subtype(s))*;from=sender-application(;variable=value)+

All fields are mandatory and must be completed in the order indicated:

- UB2: identifies the version of the bus.
- type: defines the type of data, possibly with one or more sub-types indicated after it and separated (by convention) from the type by the colon character ":", which is a reserved character (by convention) for types and sub-types.
- from: identifies the origin of the data, that is, the sending application.
- The following fields define the data. The data format is of the form variable=value. If several data are transmitted, the separator character between these data is the semicolon character ";", which is a reserved character in the UsyBus datagram.
- There is no terminator character in the message.

To avoid compatibility issues with charsets, the UsyBus datagram uses only US-ASCII characters. The semicolon character ";" is a reserved character and should never be used other than as a separator.
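As a minimal sketch of the datagram layout described above, the following Python functions build and split a message. The function names (`build_datagram`, `parse_datagram`) and the returned dictionary layout are assumptions made for illustration; they are not part of the UsyBus libraries.

```python
def _check(text: str) -> str:
    """Reject empty elements, the reserved ';' and non US-ASCII characters."""
    if not text or ";" in text or not text.isascii():
        raise ValueError(f"invalid datagram element: {text!r}")
    return text


def build_datagram(data_type: str, sender: str, **fields) -> str:
    """Assemble header (version, type, origin) and payload (variable=value pairs)."""
    if not fields:
        raise ValueError("at least one variable=value pair is required")
    parts = ["UB2", "type=" + _check(data_type), "from=" + _check(sender)]
    for name, value in fields.items():
        parts.append(_check(name) + "=" + _check(str(value)))
    return ";".join(parts)  # no terminator character is appended


def parse_datagram(message: str) -> dict:
    """Split a datagram into type, sub-types, origin and payload (all as strings)."""
    parts = message.split(";")
    if len(parts) < 4 or parts[0] != "UB2":
        raise ValueError("not a UB2 datagram")
    if not parts[1].startswith("type=") or not parts[2].startswith("from="):
        raise ValueError("header fields missing or out of order")
    type_and_subtypes = parts[1][len("type="):].split(":")
    data = {}
    for part in parts[3:]:
        name, sep, value = part.partition("=")
        if not sep or not name or not value:
            raise ValueError(f"malformed variable=value pair: {part!r}")
        data[name] = value
    return {
        "type": type_and_subtypes[0],
        "subtypes": type_and_subtypes[1:],
        "from": parts[2][len("from="):],
        "data": data,
    }
```

Payload values are kept as strings here; converting them to the types listed for each data type is left to the receiving agent.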

The POSIX regular expression used to recognize a syntactically valid UsyBus datagram is:

UB2;type=[^;]+;from=[^;]+(;[^;]+=[^;]+)+

Messaging system

UsyBus agents use the open-source Ivy software library (https://www.eei.cena.fr/products/ivy/) as their messaging library. Ivy's authors describe it as "a simple protocol and a set of open-source (LGPL) libraries and programs that allows applications to broadcast information through text messages, with a subscription mechanism based on regular expressions".

The implementation of UsyBus uses the binding mechanism of Ivy, limiting it to the header part of messages defining their type. Any UsyBus agent could be implemented directly with the Ivy library while respecting the UsyBus framework. However, to support the use of UsyBus with Ivy, specific UsyBus libraries are implemented in Java. Any other language can implement UsyBus, provided the Ivy library is available for that language (e.g., Python, C++).
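To illustrate what a subscription limited to the header could look like, the sketch below uses Python's standard `re` module rather than Ivy itself. The exact binding expressions used by the UsyBus Java libraries are not given in the text, so `header_pattern` and `route` are hypothetical; only the validity expression is taken from the datagram section.

```python
import re

# Validity expression quoted in the datagram section; fullmatch() applies it
# to the whole message.
VALID_DATAGRAM = re.compile(r"UB2;type=[^;]+;from=[^;]+(;[^;]+=[^;]+)+")


def header_pattern(data_type: str):
    """Assumed subscription pattern: constrain the header only and capture
    the sender and the raw payload without inspecting payload fields."""
    return re.compile(r"^UB2;type=" + re.escape(data_type) + r";from=([^;]+);(.*)$")


def route(message: str, subscriptions) -> int:
    """Deliver a message to every handler whose header pattern matches.

    `subscriptions` is a list of (compiled pattern, handler) pairs; each
    handler receives (sender, payload). Returns the number of deliveries.
    """
    if VALID_DATAGRAM.fullmatch(message) is None:
        return 0  # syntactically invalid datagrams are dropped
    delivered = 0
    for pattern, handler in subscriptions:
        match = pattern.match(message)
        if match:
            handler(match.group(1), match.group(2))
            delivered += 1
    return delivered
```

Matching on the header alone keeps the subscription independent of the payload fields, so a data type can gain optional parameters without invalidating existing subscriptions.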

Main Data Types

If the datagram format is the cornerstone of the UsyBus framework, the data types must be considered as keystones. As developers can create UsyBus agents with no knowledge about the agents to which they will be connected, the definition of UsyBus data types must be precise and unambiguous both syntactically and semantically. Incorrect or incoherent definitions of data types may produce communication mismatches between agents in the dataflow and, as a consequence, unexpected behaviors of applications. This is why a significant effort must be devoted to the specification and the documentation of data types. Main data types are documented below.

Remark: In almost all data types, a "device" parameter distinguishes different transmitters providing the same type of data to guarantee uniqueness. In the context of eye-tracking, the "device" parameter represents the name of the surface monitored by an eye-tracker that the user is looking at. Usually, there is only one "device" per eye-tracker and per experiment, and its name is the DNS short name of the computer that hosts the eye-tracker controller. In some cases, there may be several "devices" per experiment (when there are several eye-trackers) or per eye-tracker (when an eye-tracker can monitor several surfaces).

Point

This type of message is usually sent by an eye-tracker controller. This data type describes the point the user is looking at on the screen for a defined timecode. The point is sent only if the coordinates are considered reliable by the eye-tracker. It consists of the gaze coordinates, in pixels, of the point on the screen that the user is looking at. The type also has an optional "fixed" parameter specifying whether the gaze point belongs to a fixation.

type=eyetracking:point

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| Integer | x | x-axis coordinate of the point in the screen (pixels) |
| Integer | y | y-axis coordinate of the point in the screen (pixels) |
| Boolean | fixed (optional) | fixation indication (true or false) |
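The Point parameters listed above could be mapped onto a typed record as follows. This is a sketch under the assumption that the payload arrives as the raw `variable=value;...` string; the `GazePoint` class and its methods are hypothetical names, not part of the UsyBus libraries.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GazePoint:
    tc: int                       # timecode, milliseconds since the epoch
    device: str                   # name of the device
    x: int                        # pixels
    y: int                        # pixels
    fixed: Optional[bool] = None  # optional fixation indication

    @classmethod
    def from_payload(cls, payload: str) -> "GazePoint":
        """Convert the string payload of an eyetracking:point message."""
        fields = dict(item.split("=", 1) for item in payload.split(";"))
        fixed = fields.get("fixed")
        if fixed not in (None, "true", "false"):
            raise ValueError(f"fixed must be true or false, got {fixed!r}")
        return cls(
            tc=int(fields["tc"]),
            device=fields["device"],
            x=int(fields["x"]),
            y=int(fields["y"]),
            fixed=None if fixed is None else fixed == "true",
        )

    def to_payload(self) -> str:
        """Serialize back to variable=value pairs, omitting 'fixed' when unset."""
        text = f"tc={self.tc};device={self.device};x={self.x};y={self.y}"
        if self.fixed is not None:
            text += ";fixed=" + ("true" if self.fixed else "false")
        return text
```

A missing mandatory parameter raises `KeyError` and a non-numeric coordinate raises `ValueError`, so a malformed message fails early instead of propagating through the dataflow.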

Pupils Sizes

This type of message is usually sent by an eye-tracker controller. This data type describes the diameters of the two pupils of the user for a defined timecode. The diameter values are expressed in the device-specific format of the eye-tracker system, and should be considered as relative to the distance between the user and the eye-tracker, unless otherwise indicated.

type=eyetracking:pupils

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| Double | left | left pupil diameter (device-specific format) |
| Double | right | right pupil diameter (device-specific format) |

Time

This type of message is usually sent by an eye-tracker controller. This type represents the current time of the eye-tracker device's real-time clock. The value may differ from the host computer's system time. This message may be used for synchronization purposes. The device parameter is not required since the real-time clock is not linked to a specific screen.

type=eyetracking:time

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
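One possible use of Time messages for synchronization is to estimate the offset between the eye-tracker clock and the host clock. The sketch below is an assumption about how a receiving agent might do this, not a procedure described by UsyBus: it pairs each received device timecode with the host time at reception, ignores transmission latency, and takes the median to dampen jitter. Both function names are hypothetical.

```python
import statistics


def estimate_offset_ms(pairs) -> int:
    """Estimate host-minus-device clock offset in milliseconds.

    `pairs` is an iterable of (device_tc, host_tc_at_reception) tuples.
    Transmission latency is ignored, so the result is biased by roughly
    the typical delivery delay.
    """
    return round(statistics.median(host - device for device, host in pairs))


def to_host_time(device_tc: int, offset_ms: int) -> int:
    """Convert a timecode stamped by the eye-tracker clock to host time."""
    return device_tc + offset_ms
```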

Device

This type of message is usually sent by the system that manages the screen seen by the user. This type describes the dimensions of the screen the user is looking at. This message is usually sent at the beginning of data collection to set up graphical monitoring systems.

type=eyetracking:device

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| Integer | width | width of the screen (pixels) |
| Integer | height | height of the screen (pixels) |

Fixation

This type of message is usually sent by the eye movement analysis system. This type describes a fixation in the sense of the result of the analysis of eye movements. The type contains the position of the fixation on the screen, the mean radius, the maximum radius, and the duration of the fixation. Fixations are detected by a selectable ad-hoc algorithm. The timecode refers to the first point that belongs to the fixation.

type=eyetracking:fixation

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| Integer | x | x-axis coordinate of the center of the fixation in the screen (pixels) |
| Integer | y | y-axis coordinate of the center of the fixation in the screen (pixels) |
| Integer | meanradius | mean radius of the fixation (pixels) |
| Integer | maxradius | maximum radius of the fixation (pixels) |
| Long | duration | duration of the fixation (milliseconds) |

Zone

This type of message is usually sent by the target application that implements the user interface seen by the user. The zone represents an area of interest to analyse. Four types of zones are defined: rectangular, circular, elliptical, and point. Two virtual types of zones are defined: zone-to-remove and zone-to-remove-all, to indicate the disappearance of one or all zones respectively. The type contains the position, the dimensions, and the name of the zone. The name of the zone must be unique for a specific device. The optional widget parameter can be used for the expanded eye-tracking monitor.

type=eyetracking:zone

| Type | Name | Applies to | Description |
| --- | --- | --- | --- |
| Long | tc | all | timecode (milliseconds since the epoch) |
| String | device | all | name of the device (alphanumeric) |
| String | type | all | type of the zone (enumeration: ZoneCircle, ZoneEllipse, ZonePoint, ZoneRectangle, ZoneToRemove, or ZoneToRemoveAll) |
| String | name | all but ZoneToRemoveAll | name of the zone (alphanumeric) |
| String | widget | all but ZoneToRemoveAll (optional) | widget type of the zone (alphanumeric) |
| Integer | x | ZoneCircle, ZoneEllipse, ZonePoint | x-axis coordinate of the center (pixels) |
| Integer | y | ZoneCircle, ZoneEllipse, ZonePoint | y-axis coordinate of the center (pixels) |
| Integer | x1 | ZoneRectangle | x-axis coordinate of the upper left corner (pixels) |
| Integer | y1 | ZoneRectangle | y-axis coordinate of the upper left corner (pixels) |
| Integer | x2 | ZoneRectangle | x-axis coordinate of the lower right corner (pixels) |
| Integer | y2 | ZoneRectangle | y-axis coordinate of the lower right corner (pixels) |
| Integer | r | ZoneCircle | radius of the circle (pixels) |
| Integer | a | ZoneEllipse | half-width of the ellipse (pixels) |
| Integer | b | ZoneEllipse | half-height of the ellipse (pixels) |
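To show how the zone parameters above determine an area of interest, here is a minimal point-in-zone test. It is a sketch, not the detector used by the UsyBus agents: the `zone_contains` function is hypothetical, zones are assumed to be already decoded into dictionaries with integer values, `a` and `b` are assumed to be strictly positive, and ZonePoint is matched exactly, whereas a real detector would probably allow a tolerance.

```python
def zone_contains(zone: dict, px: int, py: int) -> bool:
    """Return True if screen point (px, py) lies inside the zone (borders included)."""
    kind = zone["type"]
    if kind == "ZoneRectangle":
        # (x1, y1) is the upper left corner, (x2, y2) the lower right corner;
        # the y axis is assumed to grow downwards, as in screen coordinates.
        return zone["x1"] <= px <= zone["x2"] and zone["y1"] <= py <= zone["y2"]
    if kind == "ZoneCircle":
        dx, dy = px - zone["x"], py - zone["y"]
        return dx * dx + dy * dy <= zone["r"] ** 2
    if kind == "ZoneEllipse":
        # Normalized ellipse equation with half-width a and half-height b.
        dx = (px - zone["x"]) / zone["a"]
        dy = (py - zone["y"]) / zone["b"]
        return dx * dx + dy * dy <= 1.0
    if kind == "ZonePoint":
        return px == zone["x"] and py == zone["y"]
    # ZoneToRemove and ZoneToRemoveAll carry no geometry.
    raise ValueError(f"zone type without geometry: {kind}")
```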

Fixation in Zone

This message is usually sent by the eye movement analysis system. The fixation in zone type describes the zone that a user fixated for a certain amount of time.

type=eyetracking:fixinzone

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| String | name | name of the zone (alphanumeric) |
| Long | duration | duration of fixation in the zone (milliseconds) |

Cognitive Load

This type of message is usually sent by an eye-tracker controller. The type represents the data of the right and left Index of Cognitive Activity (ICA).

type=eyetracking:load

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| Double | lICA | left index of cognitive activity (numeric in 0..1) |
| Double | rICA | right index of cognitive activity (numeric in 0..1) |

Task

This type of message is usually sent by the cognitive load monitor. The type represents the current user task.

type=eyetracking:task

| Type | Name | Description |
| --- | --- | --- |
| Long | tc | timecode (milliseconds since the epoch) |
| String | device | name of the device (alphanumeric) |
| String | taskname | name of the task (alphanumeric) |

Reusable Agents

This part is still under construction: the source code license is to be determined.

A substantial set of UsyBus agents has been developed within research and development projects. Agents that are reusable across projects are listed below. All UsyBus agents require the Ivy library (https://www.eei.cena.fr/products/ivy/).

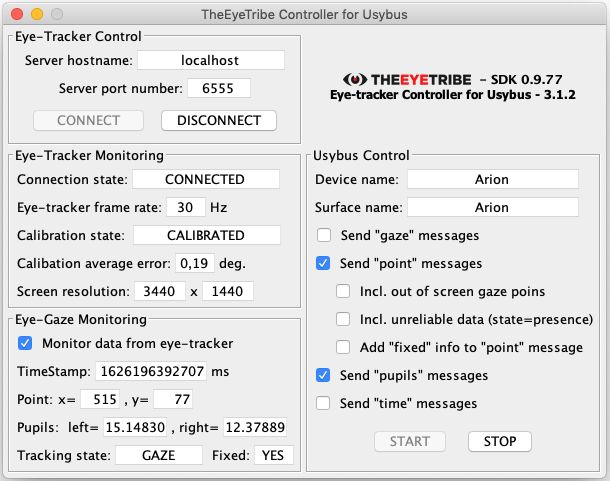

The Eye Tribe Controller

The Eye Tribe Controller interfaces any “Eye Tribe” (https://theeyetribe.com) eye-tracker device to the data exchange bus.

Data types received: none

Data types sent:

- eyetracking:point

- eyetracking:pupils

- eyetracking:time

- eyetracking:device

User interface:

Executable: under construction

Source code: under construction

Required software: Eye Tribe SDK (https://github.com/eyetribe/sdk-installers/releases)

Widget Tracker Library

The Widget Tracker library is a utility library that tracks the positions of the widgets in a graphical user interface, and sends the geometry of these widgets as Zone messages on the bus. The library is not, strictly speaking, an agent since it is not a stand-alone application, but the library, when used by an application, enables that application to automatically create an agent on the bus. The library is compatible with any application that uses the Java Swing graphical library.

Data types received: none

Data types sent:

- eyetracking:zone

User interface: N/A

Executable: N/A

Source code: under construction

Eye Tracking Analyzer

The Eye Tracking Analyzer is composed of two virtual agents, an Eye Gaze Fixations Filter and a Fixations in Zones Detector, which share the same user interface. The Eye Gaze Fixations Filter agent captures data concerning eye gaze points and returns new data concerning the eye fixations to the bus. The analysis can be performed with several algorithms, such as the Dispersion-Threshold Identification (I-DT) algorithm. The Fixations in Zones Detector agent captures the fixations and zones, and returns the fixations in zones, that is, which zones have been fixated and for how long.

Data types received:

- eyetracking:point

- eyetracking:zone

Data types sent:

- eyetracking:fixation

- eyetracking:fixinzone

User interface:

Executable: under construction

Source code: under construction
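The Eye Gaze Fixations Filter mentions the Dispersion-Threshold Identification (I-DT) algorithm. The sketch below follows the commonly described form of I-DT, where dispersion is (max x - min x) + (max y - min y) over a window of points; it is not the agent's actual implementation. The function name and the threshold defaults are assumptions, and the mean and maximum radius of the Fixation data type are left out because their exact definition is not given in the text.

```python
def idt_fixations(points, max_dispersion=30, min_duration=100):
    """Detect fixations in gaze points with a dispersion-threshold rule.

    `points` is a list of (tc, x, y) tuples sorted by timecode (ms, pixels).
    Returns dictionaries with tc (first point), x, y (centroid) and duration.
    """
    def dispersion(window):
        xs = [p[1] for p in window]
        ys = [p[2] for p in window]
        return (max(xs) - min(xs)) + (max(ys) - min(ys))

    fixations = []
    start, n = 0, len(points)
    while start < n:
        # Grow the initial window until it spans at least min_duration.
        end = start
        while end < n and points[end][0] - points[start][0] < min_duration:
            end += 1
        if end >= n:
            break  # not enough remaining data for a full window
        if dispersion(points[start:end + 1]) <= max_dispersion:
            # Extend the window while the points stay close together.
            while end + 1 < n and dispersion(points[start:end + 2]) <= max_dispersion:
                end += 1
            window = points[start:end + 1]
            fixations.append({
                "tc": window[0][0],
                "x": round(sum(p[1] for p in window) / len(window)),
                "y": round(sum(p[2] for p in window) / len(window)),
                "duration": window[-1][0] - window[0][0],
            })
            start = end + 1
        else:
            start += 1  # drop the first point and try again
    return fixations
```

The returned timecode is that of the first point of the window, which matches the convention stated for the Fixation data type.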

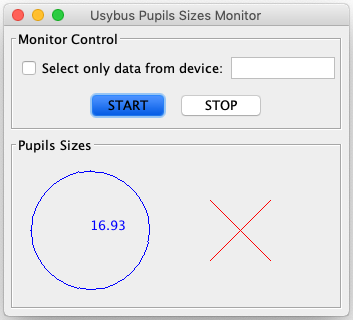

Pupils Sizes Monitor

The Pupils Sizes Monitor agent is a simple monitoring agent displaying the pupil size for both eyes. This agent is often used to monitor whether the eye-tracker "sees" the user, since pupil size is the first data an eye-tracker captures when detecting the user’s eyes.

Data types received:

- eyetracking:pupils

Data types sent: none

User interface:

Executable: under construction

Source code: under construction

Eye Tracking Monitor

The Eye Tracking Monitor agent is a monitoring agent that displays in real time the gaze points, the fixations, the zones, and the fixations in the zones. As the Eye Tracking Monitor agent displays almost all eye-tracking related data that are available on the bus, it is primarily used for debugging or for monitoring an experiment.

Data types received:

- eyetracking:point

- eyetracking:fixation

- eyetracking:zone

- eyetracking:fixinzone

- eyetracking:device

Data types sent: none

User interface:

Executable: under construction

Source code: under construction

Reference Article:

Francis Jambon and Jean Vanderdonckt. 2022. UsyBus: A Communication Framework among Reusable Agents integrating Eye-Tracking in Interactive Applications. Proc. ACM Hum.-Comput. Interact. 6, EICS, Article 157 (June 2022), 36 pages. https://doi.org/10.1145/3532207

About Us...

Francis JAMBON

Laboratoire d'Informatique de Grenoble (LIG)

Université Grenoble Alpes

https://lig-membres.imag.fr/jambon/

Jean VANDERDONCKT

Louvain Research Institute in Management and Organizations (LouRIM)

Université catholique de Louvain

https://uclouvain.be/fr/repertoires/jean.vanderdonckt